Addressing the challenge of effectively processing long contexts has become a critical issue for Large Language Models (LLMs).

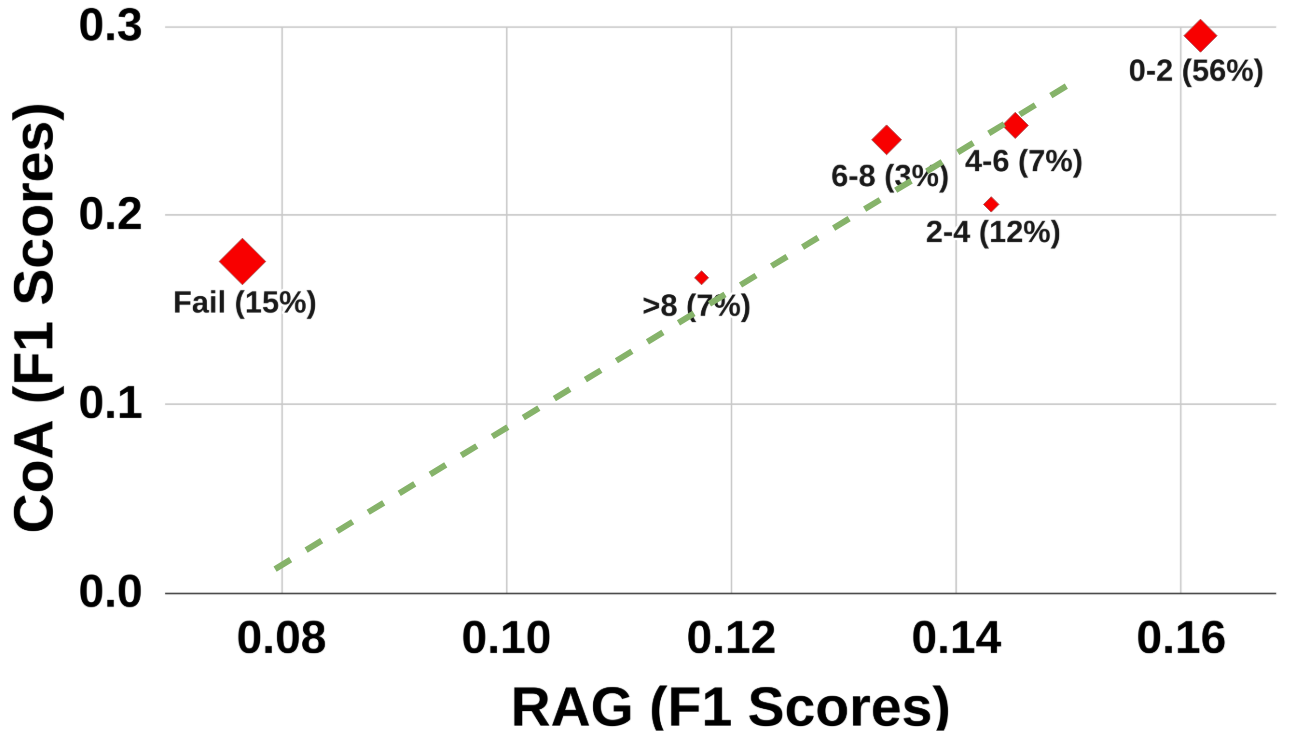

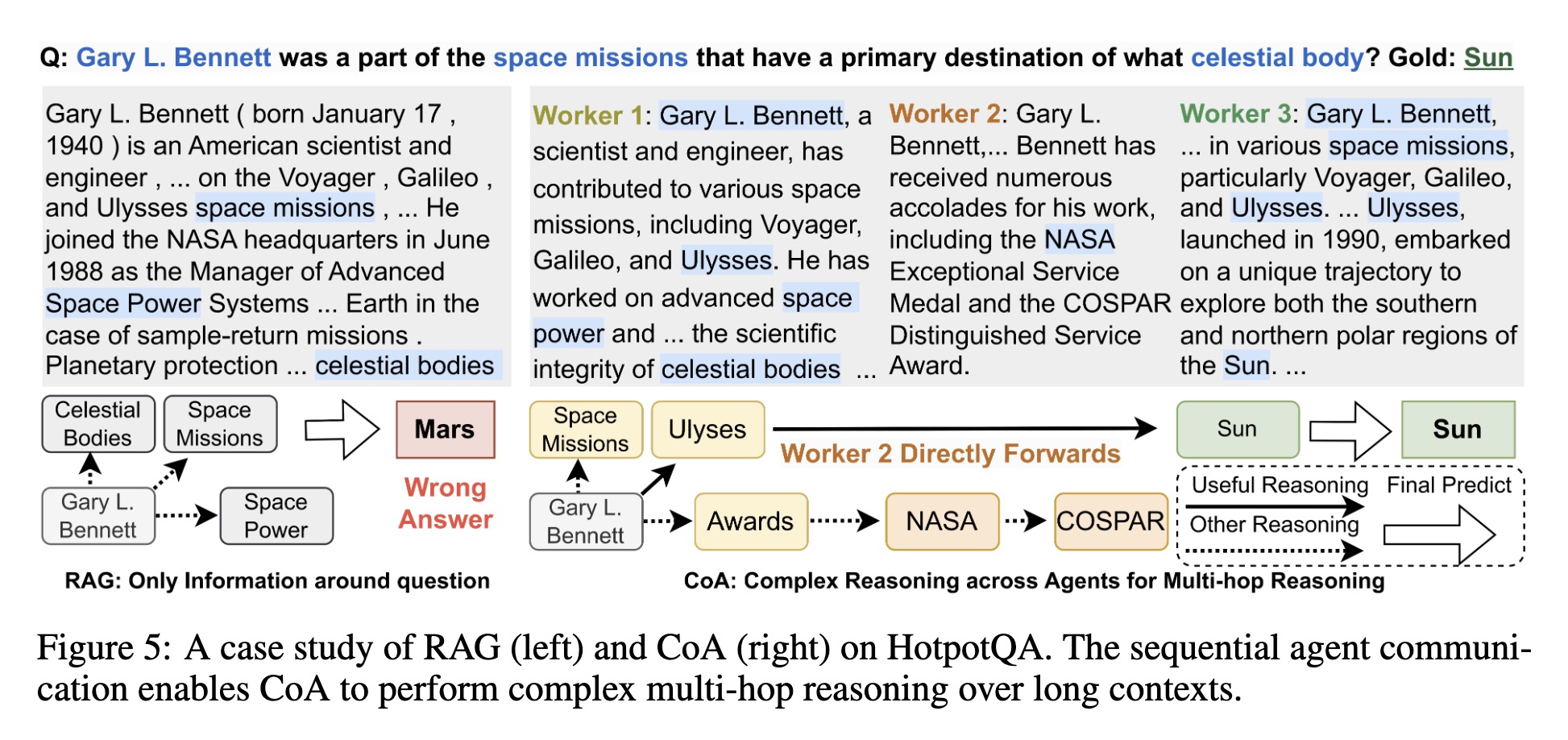

Two common strategies have emerged: 1) reducing the input length, such as retrieving relevant chunks by Retrieval-Augmented Generation (RAG),

and 2) expanding the context window limit of LLMs. However, both strategies have drawbacks: input reduction has no guarantee of covering

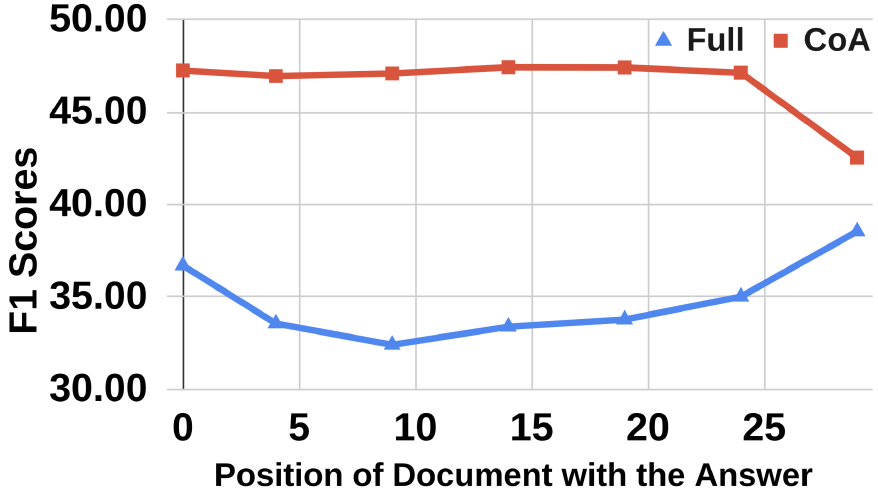

the part with needed information, while window extension struggles with focusing on the pertinent information for solving the task.

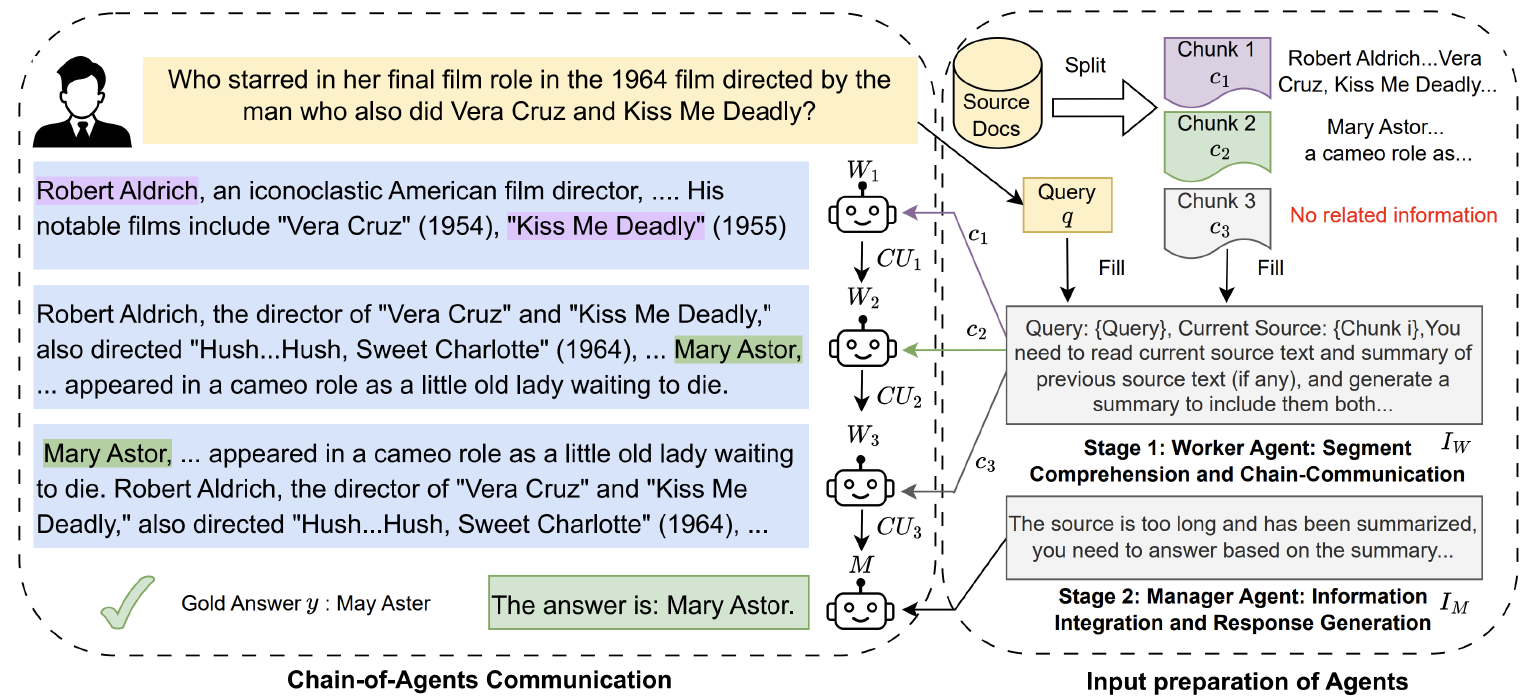

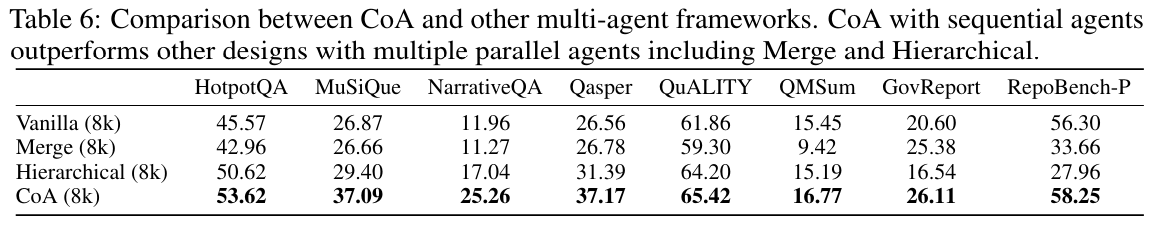

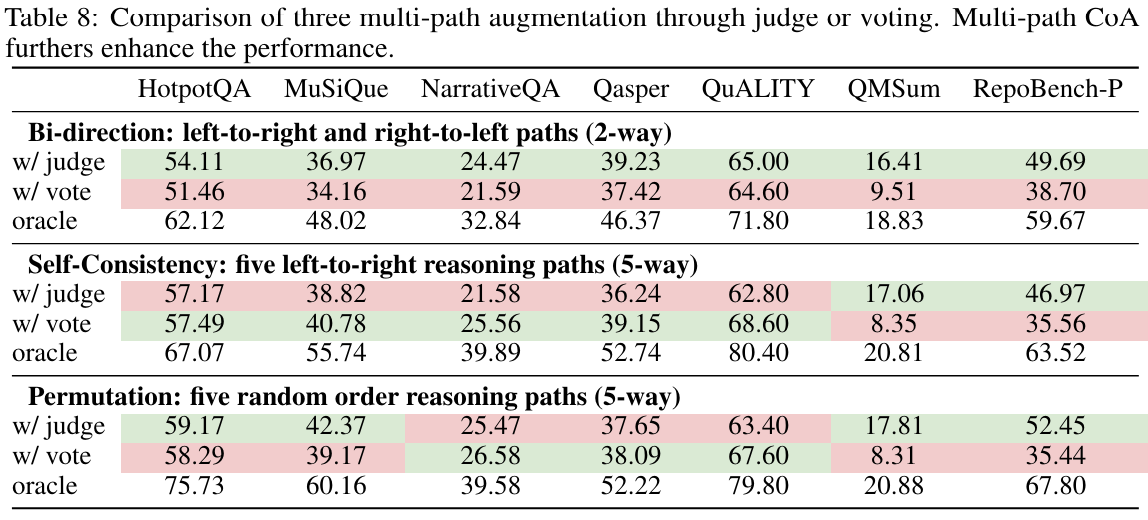

To mitigate these limitations, we propose Chain-of-Agents (CoA), a novel framework that harnesses multi-agent collaboration through natural

language to enable information aggregation and context reasoning across various LLMs over long-context tasks.

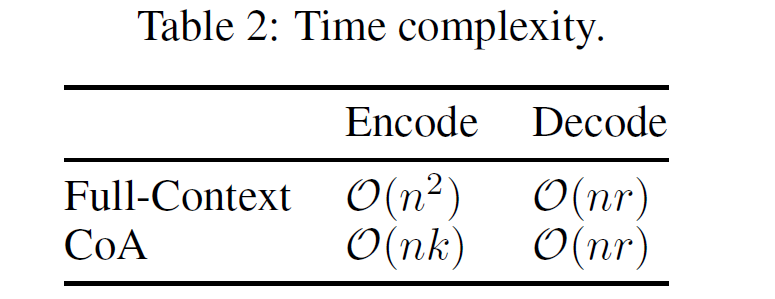

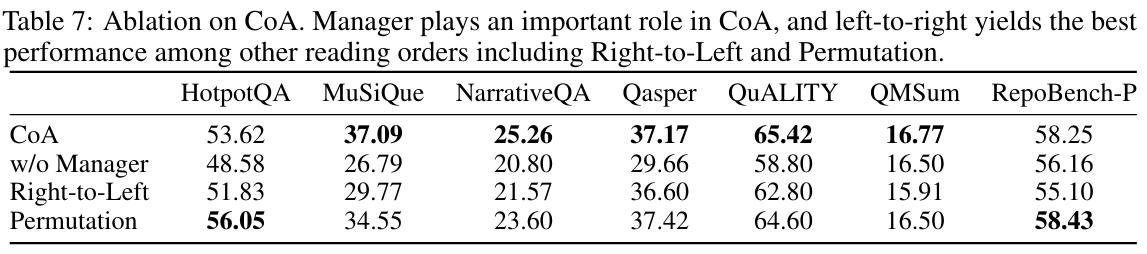

CoA consists of multiple worker agents who sequentially communicate to handle different segmented portions of the text, followed by

a manager agent who synthesizes these contributions into a coherent final output.

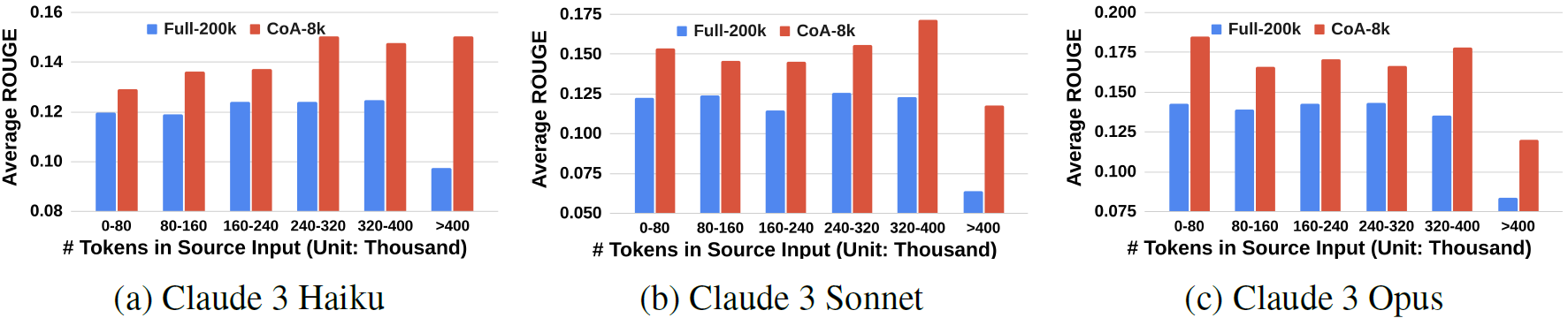

CoA processes the entire input by interleaving reading and reasoning, and it mitigates long context focus issues by assigning each agent

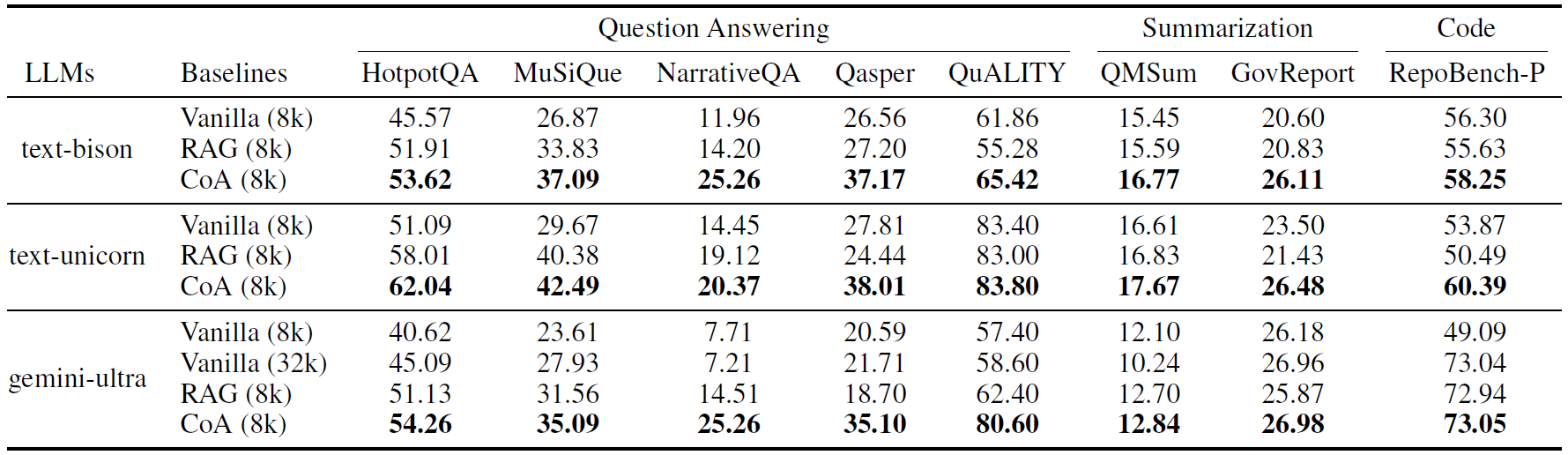

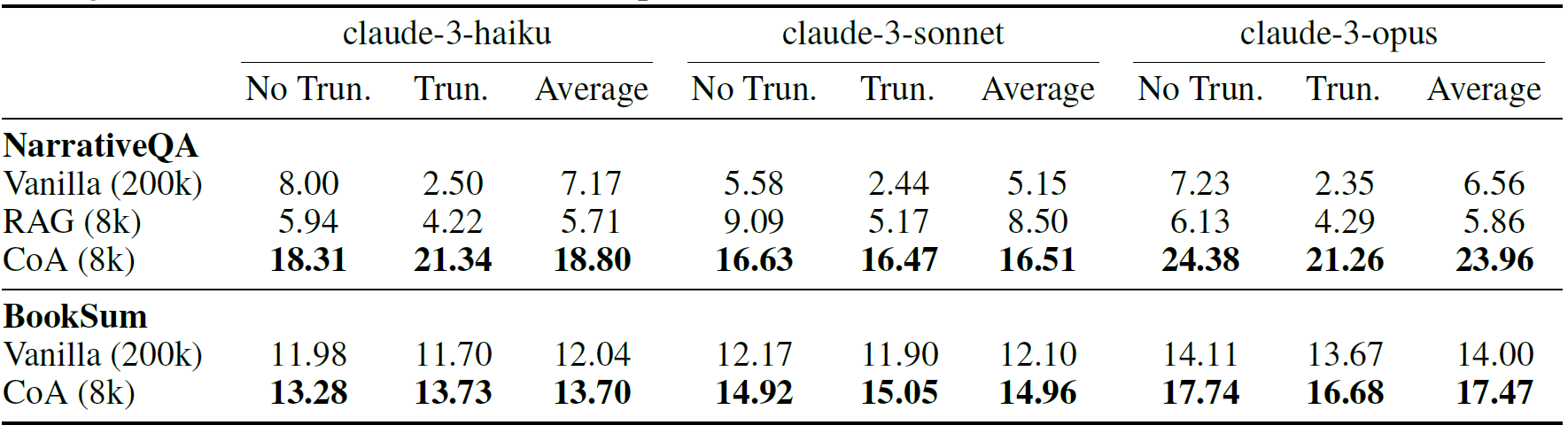

a short context. We perform comprehensive evaluation of CoA on a wide range of long-context tasks in question answering, summarization,

and code completion, demonstrating significant improvements by up to 10% over strong baselines of RAG, Full-Context, and multi-agent LLMs.